I recently graduated with a Master's degree in Software Engineering from the School of Computer Science (SCS) at Carnegie Mellon University and joined AWS as a Software Development Engineer. Before that, I obtained my Bachelor's degree in Software Engineering from Tongji University. During my Bachelor's studies, I interned at Shanghai AI Lab and ByteDance AI Lab.

My background in software engineering has provided me with strong engineering skills. Based on this foundation, my primary experiences and interests include but are not limited to:

- NLP: LLM alignment, Low-resource NLP, Distillation

- MLOps: ML Pipelines, Anomaly Detection

You can also refer to the projects page for details. I am also keen to explore the fields of LLMs and MLSys.

I am attending EMNLP in Suzhou, China (November 2025) and NeurIPS in San Diego (December 2025). Feel free to reach out if you are also attending and would like to chat! I am open to discussing a wide range of topics, including potential collaboration opportunities.

Education

- Carnegie Mellon University: Master's in Software Engineering (Aug. 2023 - Dec. 2024)

- Tongji University: B.E. in Software Engineering (Sep. 2019 - Jul. 2023)

- North Carolina State University: GEARS Research Program (Jun. 2022 - Aug. 2022)

Experience

- Amazon Web Services: Software Development Engineer (Jun. 2025 - Present)

- ByteDance AI Lab: Intern | Volcano Machine Translation, AI Lab (Mar. 2023 - Aug. 2023)

- Shanghai AI Lab: Research Intern | AI for Imaging Group (Nov. 2022 - Feb. 2023)

Selected Publications (view all )

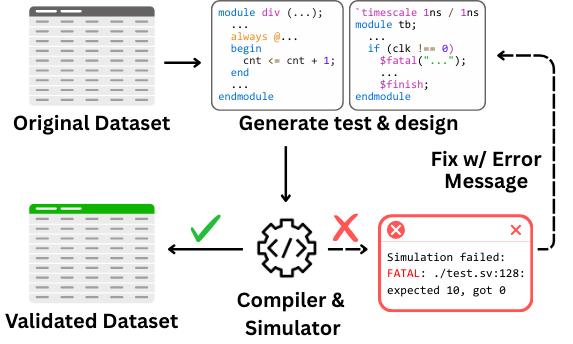

VeriCoder: Enhancing LLM-Based RTL Code Generation through Functional Correctness Validation

Anjiang Wei, Huanmi Tan, Tarun Suresh, Daniel Mendoza, Thiago SFX Teixeira, Ke Wang, Caroline Trippel, Alex Aiken

NeurIPS 2025 Fourth Workshop on Deep Learning for Code

VeriCoder is a model for RTL (Register Transfer Level) code generation, fine-tuned on a novel dataset that is functionally validated via feedback-directed refinement. Unlike prior datasets that only ensure syntactic correctness, our dataset guarantees that each RTL design passes automatically generated unit tests aligned with its natural language specification. Our key contributions include: (1) a large-scale dataset of 125,000+ examples with simulation-passing RTL designs, (2) a feedback-driven construction methodology that iteratively refines designs and tests based on test results, (3) superior performance with up to +71.7% relative improvement on VerilogEval benchmarks, and (4) comprehensive resources including dataset, model weights, inference scripts, and training pipeline.

SATBench: Benchmarking LLMs’ Logical Reasoning via Automated Puzzle Generation from SAT Formulas

Anjiang Wei, Yuheng Wu, Yingjia Wan, Tarun Suresh, Huanmi Tan, Zhanke Zhou, Sanmi Koyejo, Ke Wang, Alex Aiken

EMNLP 2025 (Main)

We introduce SATBench, a benchmark for evaluating the logical reasoning capabilities of large language models (LLMs) through logical puzzles derived from Boolean satisfiability (SAT) problems. Unlike prior work that focuses on inference rule-based reasoning, which often involves deducing conclusions from a set of premises, our approach leverages the search-based nature of SAT problems, where the objective is to find a solution that fulfills a specified set of logical constraints. Each instance in SATBench is generated from a SAT formula, then translated into a story context and conditions using LLMs. The generation process is fully automated and allows for adjustable difficulty by varying the number of clauses. All 2100 puzzles are validated through both LLM-assisted and solver-based consistency checks, with human validation on a subset. Experimental results show that even the strongest model, o4-mini, achieves only 65.0% accuracy on hard UNSAT problems, close to the random baseline of 50%. SATBench exposes fundamental limitations in the search-based logical reasoning abilities of current LLMs and provides a scalable testbed for future research in logical reasoning.

Improving Assembly Code Performance with Large Language Models via Reinforcement Learning

Anjiang Wei, Tarun Suresh, Huanmi Tan, Yinglun Xu, Gagandeep Singh, Ke Wang, Alex Aiken

NeurIPS 2025 Fourth Workshop on Deep Learning for Code

Large language models (LLMs) have demonstrated strong performance across a wide range of programming tasks, yet their potential for code optimization remains underexplored. This work investigates whether LLMs can optimize the performance of assembly code, where fine-grained control over execution enables improvements that are difficult to express in high-level languages. We present a reinforcement learning framework that trains LLMs using Proximal Policy Optimization (PPO), guided by a reward function that considers both functional correctness, validated through test cases, and execution performance relative to the industry-standard compiler gcc -O3. To support this study, we introduce a benchmark of 8,072 real-world programs. Our model, Qwen2.5-Coder-7B-PPO, achieves 96.0% test pass rates and an average speedup of 1.47x over the gcc -O3 baseline, outperforming all 20 other models evaluated, including Claude-3.7-sonnet. These results indicate that reinforcement learning can unlock the potential of LLMs to serve as effective optimizers for assembly code performance.

Honors & Awards

- Second Prize of Citi Cup (Fintech Innovation Application Competition), 2022

- Academic Excellence Scholarship of Tongji University (Top 10%), 2022

- Third Prize in Mobile Application Innovation Competition, 2021

- First Prize of East China Region in Mobile Application Innovation Competition of CCCC, 2021

- Academic Excellence Scholarship of Tongji University (Top 10%), 2021

- Academic Excellence Scholarship of Tongji University (Top 10%), 2020